Summary: Structured data implementation is foundational for competitive SEO in London, influencing visibility in traditional search, voice search, and AI-driven results. This analysis by OnDigital examines the necessity of complete JSON-LD schema markup. We argue that attempting to cherry-pick schema or relying on the minimum required properties sends confusing signals to search engines, damages entity understanding, and reduces ranking potential. A complete schema implementation is mandatory for effective digital marketing.

Minimum Required Schema for SEO Will Not Secure Top Rankings in London

Is your website speaking a language search engines fully understand? If you are deploying partial JSON-LD schema, the answer is likely no. Many businesses implement the bare minimum structured data required to clear validation tools, believing this is sufficient. As a digital agency focused on results, we view this approach to schema implementation as fundamentally flawed.

Structured data is the vocabulary used to describe your content and business entities to machines. In the hyper-competitive London market, clarity is required. To be effective, a schema markup strategy must be thorough. Relying on incomplete data risks more than just missing out on rich snippets; it risks confusing Google about who you are and what you offer.

The Precision Required in Structured Data Implementation

Schema markup, often implemented using JSON-LD, translates the content of a webpage into structured data. This data feeds algorithms, including Google’s Knowledge Graph, and informs Large Language Models (LLMs). The process is about entity SEO—defining things and their relationships.

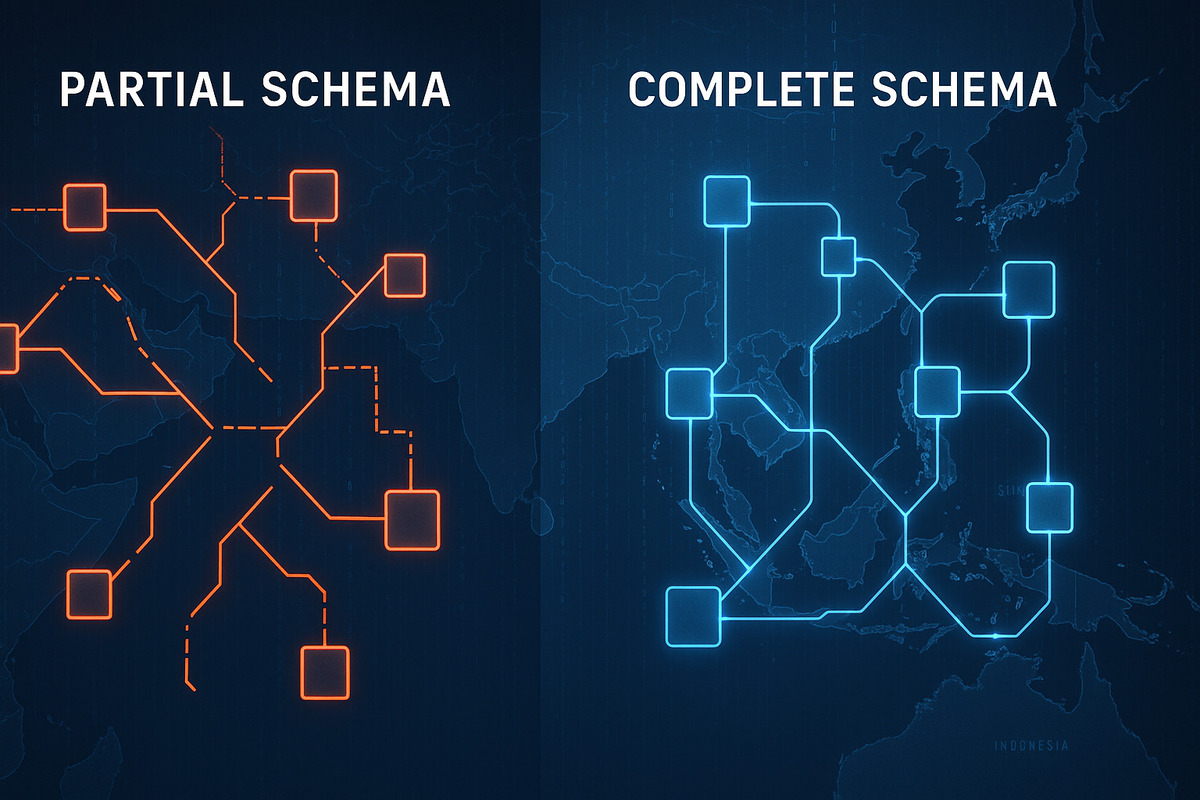

When considering complete vs. partial schema, understand that search engines can use every reliable detail you give them. They use this information to match user intent with the most relevant and trustworthy results. If you provide a LocalBusiness schema for a London-based firm, search engines want to know more than just the name and address. They want operating hours, social profiles, specific boroughs served, price ranges in GBP, departmental contacts, and official identifiers (e.g., Companies House number).

A complete schema implementation provides this depth. It leaves no ambiguity. The benefits of complete schema markup include better contextual understanding by search engines, leading to improved relevance scoring. When algorithms have high confidence in the data provided, they are more likely to rank the source highly.
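As a rough illustration of that depth, the sketch below builds a fuller LocalBusiness object in Python and serializes it to a JSON-LD script tag. Every value is a placeholder for a hypothetical London firm, the helper name `to_script_tag` is our own, and the choice of `identifier` with a PropertyValue for the Companies House number is one reasonable modelling, not the only one.

```python
import json

# All values are placeholders for a hypothetical London firm.
local_business = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "name": "Example Consulting Ltd",
    "url": "https://www.example.com/",
    "telephone": "+44 20 7946 0000",
    "priceRange": "££",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "1 Example Street",
        "addressLocality": "London",
        "postalCode": "EC1A 1AA",
        "addressCountry": "GB",
    },
    "openingHoursSpecification": [{
        "@type": "OpeningHoursSpecification",
        "dayOfWeek": ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"],
        "opens": "09:00",
        "closes": "17:30",
    }],
    "areaServed": [
        {"@type": "AdministrativeArea", "name": "City of Westminster"},
        {"@type": "AdministrativeArea", "name": "London Borough of Camden"},
    ],
    "sameAs": ["https://www.linkedin.com/company/example-consulting"],
    # Assumption: the company number is modelled as a PropertyValue identifier.
    "identifier": {
        "@type": "PropertyValue",
        "propertyID": "Companies House number",
        "value": "00000000",
    },
    "contactPoint": [{
        "@type": "ContactPoint",
        "contactType": "sales",
        "telephone": "+44 20 7946 0001",
    }],
}


def to_script_tag(data: dict) -> str:
    """Serialize a schema dict into the script element embedded in the page."""
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(to_script_tag(local_business))
```

Keeping the data in one dictionary per entity makes it easier to fill from an onboarding form and to reuse across page templates.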

Mixed Signals: The Impact of Incomplete Structured Data

Incomplete schemas generate confusion. Imagine providing a Product schema without availability or UK-specific Offer details. The search engine sees an item but cannot determine if it can be purchased in the UK. This ambiguity forces the algorithms to make assumptions, and those assumptions rarely favor the website owner.
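To make the Product example concrete, here is a minimal sketch of a Product with a UK-specific Offer, plus a small check for the Offer details discussed above. The product, SKU, and URL are invented, and `missing_offer_details` is a hypothetical helper with an illustrative property list, not an official requirement set.

```python
# Placeholder product for illustration only.
product = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Desk Lamp",
    "sku": "EX-LAMP-001",
    "offers": {
        "@type": "Offer",
        "price": "49.99",
        "priceCurrency": "GBP",
        "availability": "https://schema.org/InStock",
        "eligibleRegion": "GB",  # ISO 3166-1 alpha-2 country code
        "url": "https://www.example.com/products/desk-lamp",
    },
}


def missing_offer_details(item: dict) -> list[str]:
    """Return which of the Offer properties named above are absent."""
    offer = item.get("offers") or {}
    wanted = ("price", "priceCurrency", "availability", "eligibleRegion")
    return [key for key in wanted if key not in offer]


print(missing_offer_details(product))
print(missing_offer_details({"@type": "Product", "name": "Bare product"}))
```

The second call shows what a bare Product leaves out: every detail a search engine would need to decide whether the item can be bought in the UK.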

If you cherry-pick schema properties, you create an inconsistent narrative. For example, if your Organization schema lacks the sameAs property linking to your verified LinkedIn profile or industry accreditation bodies, search engines may struggle to verify your entity’s legitimacy. This verification is tied directly to E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness), which is critical in competitive sectors within London.

The impact of incomplete structured data is a weakened entity definition. Search engines prioritize clear, verifiable information. Mixed signals resulting from partial implementation often lead to your content being superseded by competitors who provide a fuller picture. Schema validation tools may show green lights for required properties, but recommended properties are often where the real strategic advantage lies. Common schema implementation mistakes involve ignoring these recommended fields.
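One way to catch ignored recommended fields before launch is a simple audit script. The sketch below is a minimal version under stated assumptions: the `REQUIRED` and `RECOMMENDED` lists are illustrative only and should be replaced with the current documentation for each rich result type, and `audit` is a hypothetical helper.

```python
# Illustrative property lists only; they are NOT an official specification.
REQUIRED = {"LocalBusiness": ["name", "address"]}
RECOMMENDED = {
    "LocalBusiness": [
        "telephone", "url", "openingHoursSpecification",
        "geo", "priceRange", "sameAs",
    ],
}


def audit(markup: dict) -> dict:
    """Report required and recommended properties that are missing or empty."""
    schema_type = markup.get("@type", "")

    def absent(keys):
        return [key for key in keys if not markup.get(key)]

    return {
        "missing_required": absent(REQUIRED.get(schema_type, [])),
        "missing_recommended": absent(RECOMMENDED.get(schema_type, [])),
    }


# Markup that would "pass" on required fields alone.
minimal = {
    "@type": "LocalBusiness",
    "name": "Example Ltd",
    "address": "1 Example Street, London",
}
report = audit(minimal)
print(report)
```

Here the minimal markup has nothing missing from the required list, yet every recommended property is absent, which is exactly the gap a green validation result can hide.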

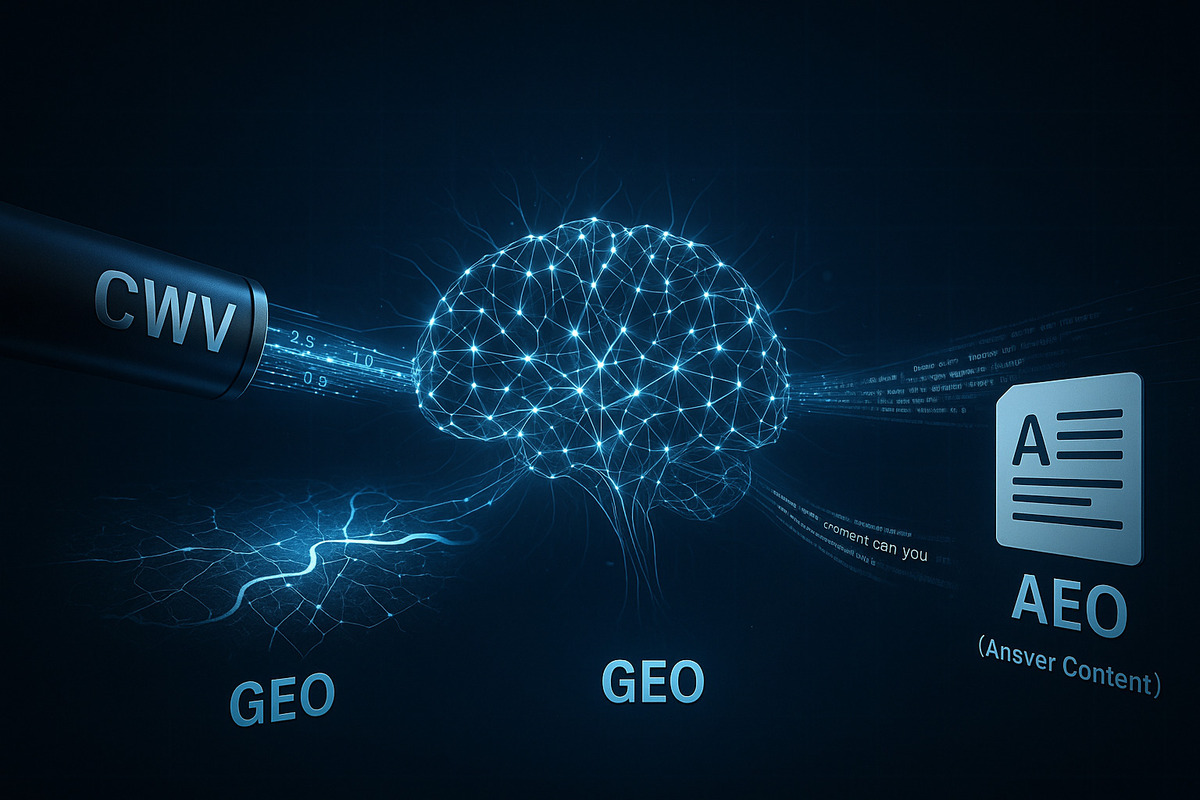

The Risks to SEO, AEO, and LLM Optimization

The consequences of partial schema extend across the entire search ecosystem. It is not just about traditional Search Engine Optimization (SEO). It affects Answer Engine Optimization (AEO), Geographic Optimization (GEO), and Large Language Model Optimization (LLMO).

SEO and Rich Snippets

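Does schema affect Google rankings? Mostly indirectly. Google has said that structured data is not in itself a ranking factor, but complete schema is necessary for eligibility for many rich snippets, and these enhanced search results can increase click-through rates. Partial schema may qualify you for basic enhancements, but the most impactful features (like pricing in SERPs or review stars) require detailed markup. Note that since 2023 Google has shown FAQ rich results mainly for well-known government and health sites, so FAQ markup is less likely to produce dropdowns for a typical business.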

AEO and Voice Search

Answer engines and voice assistants rely heavily on structured data to provide direct answers. If a user in London asks, “What time does [Your Business] close today?”, a complete LocalBusiness schema provides that information instantly. Incomplete schema means the assistant cannot answer, and you lose that interaction. AEO demands precise data extraction.
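The closing-time question can be answered directly from openingHoursSpecification data. The sketch below shows the kind of lookup a consumer of the markup could perform; it is an illustration only, not how any particular assistant works, and the hours and the `closing_time` helper are invented.

```python
from datetime import date

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]

# Placeholder opening hours in schema.org OpeningHoursSpecification form.
hours = [
    {
        "@type": "OpeningHoursSpecification",
        "dayOfWeek": ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"],
        "opens": "09:00",
        "closes": "17:30",
    },
    {
        "@type": "OpeningHoursSpecification",
        "dayOfWeek": ["Saturday"],
        "opens": "10:00",
        "closes": "14:00",
    },
]


def closing_time(specs: list, day: date):
    """Return the closing time for the given date, or None if no hours are listed."""
    day_name = DAYS[day.weekday()]  # date.weekday(): Monday is 0
    for spec in specs:
        if day_name in spec.get("dayOfWeek", []):
            return spec["closes"]
    return None


print(closing_time(hours, date.today()))
```

If the day is not covered by the markup, the lookup returns nothing, which mirrors the point above: with incomplete data there is no answer to give.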

GEO (Geographic Optimization)

For businesses targeting the London area, precise geographic data within the schema is essential. Properties like areaServed (e.g., “Greater London,” “City of Westminster”), which supersedes the older serviceArea, and geo (a GeoCoordinates object with latitude and longitude) help search engines understand your local relevance and proximity. Omitting these details weakens your local search signals within the M25.
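In schema.org terms, coordinates are expressed through the `geo` property holding a GeoCoordinates object, and served areas through `areaServed`. The fragment below is a minimal sketch with placeholder values (the coordinates are a commonly quoted central London point, not a real business location), and `has_valid_geo` is a hypothetical sanity check.

```python
# Placeholder geographic fragment for a hypothetical London business.
geo_fragment = {
    "@type": "LocalBusiness",
    "name": "Example Ltd",
    "geo": {
        "@type": "GeoCoordinates",
        "latitude": 51.5074,
        "longitude": -0.1278,
    },
    "areaServed": [
        {"@type": "AdministrativeArea", "name": "Greater London"},
        {"@type": "AdministrativeArea", "name": "City of Westminster"},
    ],
}


def has_valid_geo(markup: dict) -> bool:
    """Check that geo holds numeric coordinates within valid ranges."""
    geo = markup.get("geo") or {}
    lat, lon = geo.get("latitude"), geo.get("longitude")
    if not isinstance(lat, (int, float)) or not isinstance(lon, (int, float)):
        return False
    return -90 <= lat <= 90 and -180 <= lon <= 180


print(has_valid_geo(geo_fragment))
```

A check like this catches the common slips of swapped or missing coordinates before the markup is published.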

LLMO (Large Language Model Optimization)

AI models are trained on vast datasets, including structured data. When LLMs ingest your website’s information, complete schema provides accurate, organized facts. This increases the likelihood that AI-driven search experiences (like Google's AI Overviews, formerly SGE) and chatbots will reference your business accurately and favorably. Incomplete schema can lead to AI hallucinating details about your business or ignoring it entirely. In short, incomplete schema can hurt both your search and LLM visibility by supplying low-quality source data.

Why Cherry-Picking Schema Fails

Attempting to cherry-pick schema—selecting only the easiest properties to implement—is like firing a gun with your eyes closed. You might hit something, but you are unlikely to hit the target. This approach lacks strategy and foresight.

Search algorithms are designed to identify patterns and relationships. When data is sparse, relationships cannot be established. A schema markup strategy requires defining the primary entity on the page and then describing it fully, nesting related entities within the main structure.

For instance, an Article schema should not just have a headline and author name. It needs datePublished, dateModified, about (the topic), mentions (related entities), and detailed Author information nested within it, including the author’s credentials. Is partial schema markup effective? Only if your goal is minimal impact.
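A fuller Article object along those lines might look like the sketch below. The headline, dates, author, and credential are all invented, and `dates_are_consistent` is a hypothetical check we add to show one easy validation, namely that dateModified does not precede datePublished.

```python
from datetime import date

# Placeholder article with a nested author; every value is invented.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Why Partial Schema Falls Short",
    "datePublished": "2024-01-15",
    "dateModified": "2024-03-02",
    "about": {"@type": "Thing", "name": "Structured data"},
    "mentions": [
        {"@type": "Thing", "name": "JSON-LD"},
        {"@type": "Thing", "name": "Knowledge Graph"},
    ],
    "author": {
        "@type": "Person",
        "name": "Jane Example",
        "jobTitle": "Head of SEO",
        "url": "https://www.example.com/team/jane-example",
        "sameAs": ["https://www.linkedin.com/in/jane-example"],
        "hasCredential": {
            "@type": "EducationalOccupationalCredential",
            "name": "Example Digital Marketing Diploma",
        },
    },
}


def dates_are_consistent(item: dict) -> bool:
    """True when both dates are present and dateModified is not earlier."""
    try:
        published = date.fromisoformat(item["datePublished"])
        modified = date.fromisoformat(item["dateModified"])
    except (KeyError, ValueError):
        return False
    return modified >= published


print(dates_are_consistent(article))
```

Nesting the author as a Person with its own properties is what lets credentials travel with the article instead of leaving the author as a bare name.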

When you decide to use multiple schema types on one page, they must be interconnected and complete to form a coherent graph. Disjointed, partial schemas create noise, not signal.

Addressing Implementation Challenges: The Agency Perspective

A common objection to thorough schema implementation is the perceived difficulty. We often hear from prospective clients or other marketers, “We cannot always get all the specific information needed. This is going to add time to the project and extra administration.”

Gathering detailed information—like professional accreditations, precise geo-coordinates, or departmental phone numbers—does require effort. It adds time to the onboarding or content creation process. This administrative overhead is a real concern.

The rebuttal from OnDigital is straightforward: If you do it right from the start, you will not have to rebuild it later. The time invested in building a complete schema template pays dividends for years, with only routine maintenance needed as standards evolve. It is a foundational element of technical SEO. Viewing this process as an investment rather than an expense is necessary.

Incomplete work will eventually need correction, often requiring more time later when SEO performance stagnates in the competitive London environment. Establishing standardized processes to collect this data during onboarding is the professional standard we adhere to.

How to Prioritize Schema Implementation for Maximum Effect

Developing a sound schema markup strategy involves identifying the most critical schemas for your business model and ensuring their complete implementation.

- Identify Primary Entities: Determine what your business offers. Are you a LocalBusiness, an e-commerce store (Product schemas), a publisher (NewsArticle or BlogPosting), or a service provider (Service schema)?

- Fulfill All Required and Recommended Properties: Use Google Search Console and tools for testing schema markup (like the Schema Markup Validator) to identify not just required properties, but all relevant recommended properties.

- Nest and Interlink: Do not just place blocks of schema independently. Nest related items. An Article is written by an Author (which is a Person or Organization), which is part of a WebPage, which is part of a WebSite. This interlinking is fundamental to Entity SEO.

- Validate and Monitor: Implementation is not the end. Monitor Google Search Console for errors or warnings in the Enhancements reports. Schema standards evolve, and maintenance is required.
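The nesting and interlinking step can be sketched with a JSON-LD `@graph`, where each entity has an `@id` and other entities point to it by reference. The example below is a minimal illustration under assumptions: the URLs and `@id` naming pattern are our own convention, and `dangling_references` is a hypothetical helper that flags references to nodes not defined in the graph.

```python
BASE = "https://www.example.com"  # placeholder domain

graph = {
    "@context": "https://schema.org",
    "@graph": [
        {"@type": "Organization", "@id": f"{BASE}/#organization",
         "name": "Example Ltd"},
        {"@type": "WebSite", "@id": f"{BASE}/#website", "url": f"{BASE}/",
         "publisher": {"@id": f"{BASE}/#organization"}},
        {"@type": "WebPage", "@id": f"{BASE}/blog/post/#webpage",
         "isPartOf": {"@id": f"{BASE}/#website"}},
        {"@type": "Person", "@id": f"{BASE}/#jane", "name": "Jane Example",
         "worksFor": {"@id": f"{BASE}/#organization"}},
        {"@type": "Article", "@id": f"{BASE}/blog/post/#article",
         "headline": "Example headline",
         "author": {"@id": f"{BASE}/#jane"},
         "isPartOf": {"@id": f"{BASE}/blog/post/#webpage"}},
    ],
}


def dangling_references(doc: dict) -> list[str]:
    """List @id references that point at nodes missing from the graph."""
    nodes = doc["@graph"]
    defined = {node["@id"] for node in nodes if "@id" in node}
    dangling = set()

    def walk(value):
        if isinstance(value, dict):
            # A dict holding only "@id" is a reference to another node.
            if set(value) == {"@id"} and value["@id"] not in defined:
                dangling.add(value["@id"])
            for child in value.values():
                walk(child)
        elif isinstance(value, list):
            for child in value:
                walk(child)

    for node in nodes:
        walk(node)
    return sorted(dangling)


print(dangling_references(graph))
```

Referencing by `@id` keeps one definition per entity, so the Organization described on the homepage is the same node the Article and Person point to.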

The minimum required schema for SEO is merely the entry point. For businesses aiming to dominate London search results, maximizing the potential of structured data is required.

The Mandate for Complete Information

The debate between complete vs. partial schema implementation is settled by the requirements of modern search algorithms. Search engines and LLMs demand clarity, detail, and verifiable information. Providing anything less compromises your digital strategy.

Incomplete schema damages your ability to rank, reduces your eligibility for enhanced search features, and muddles the understanding of your business entity. A thorough schema markup strategy is not optional; it is a prerequisite for sustainable SEO success. Do not cherry-pick your data; provide the complete picture.